Tools solve a problem within a set of constraints. In the Journey of a tool note, we discussed that tools are raw by themselves; value emerges from blending them into an experience and building an ecosystem around them. An ecosystem acts as a fertile base for building higher abstractions. We can compose tools together to build complex workflows.

This article continues the exploration of tools into the era of doing more with AI. We will examine how such tool usage works in an AI-first app, and share a few learnings from building such a system.

Let’s start with a bit of history and define a few abstractions.

From tools to functions

We’ve been using the concept of digital tools perhaps since the early days of Unix.

An operating system contains a suite of command line tools like `ls`. These tools are interoperable via the standard input/output streams, shared memory and so on to achieve tasks beyond the functionality of a single tool. For example, to count lines in a file we run `cat file.txt | wc -l`.

Decades later we started building large distributed systems where APIs took the place of tools. A combination of APIs stitched together across services could achieve far more complex workflows than any single service [1]. During this evolution, we also learned to keep the API specifications separate from their invocation. This allowed us to connect heterogeneous systems together.

The current evolution with GenAI brings several new challenges compared to its predecessors:

- AI models lack factual accuracy and depend on tools to perform various actions. We cannot embed all the knowledge at training time [2]. We need the ecosystem of tools now more than ever.

- Data and control flows in API composition are changing from a deterministic, imperative model to a non-deterministic, free-flowing one. Natural language is immensely more expressive and can create numerous permutations of the control flow. Earlier, this was limited by the developers and programming language constructs.

- The actor is shifting from a human to an AI agent. The conventional “point and click” approach is being transformed into a “command, consult and partner” model. It is hard to understand intent: a conversation can move between “help and support”, “queries” and “write” operations.

First, we must skill up the AI with tools to help perform actions for us [3]. Second, we must define newer abstractions to help AI models reason over free-flowing natural language. The model must decide which APIs to invoke, when to invoke them and how to invoke them based on context.

Luckily, we can stand on the shoulders of earlier service-oriented patterns. But before we dive deeper, let’s look into an example first.

An example conversation

Let’s take a chat between User and AI Assistant and use it as a reference for discussing the various challenges.

    // Example query or read operation
    USER: Are there any traffic congestions on my way to Work?
    ASSISTANT: I see a road construction in-progress near the RKM Highway with
    delays up to ~20 mins. I'd suggest taking the longer route via Semino Mall road.
    You can reach Work by 9.10 AM. Would you like to open the directions in Map?

    // Example command or write operation
    USER: Add a new contact for Ramize Peth with details from my last phone call.
    Send an invite to join on WhatsApp.
    ASSISTANT: Sure, I will perform below operations. Does this look right?
    * Add contact: "Ramize Peth", phone: 9876512340
    * I will invite phone number 9876512340 to connect on WhatsApp
    USER: Looks great
    ASSISTANT: Done! Here's the new contact profile:
    https://go.codito.in/contacts/ramize. You can see the WhatsApp invitation in
    the app. Is there anything else you need help with?

How do you design a system like above? Let’s look at a mental model from Lilian Weng’s blog post (link below, highly recommended read).

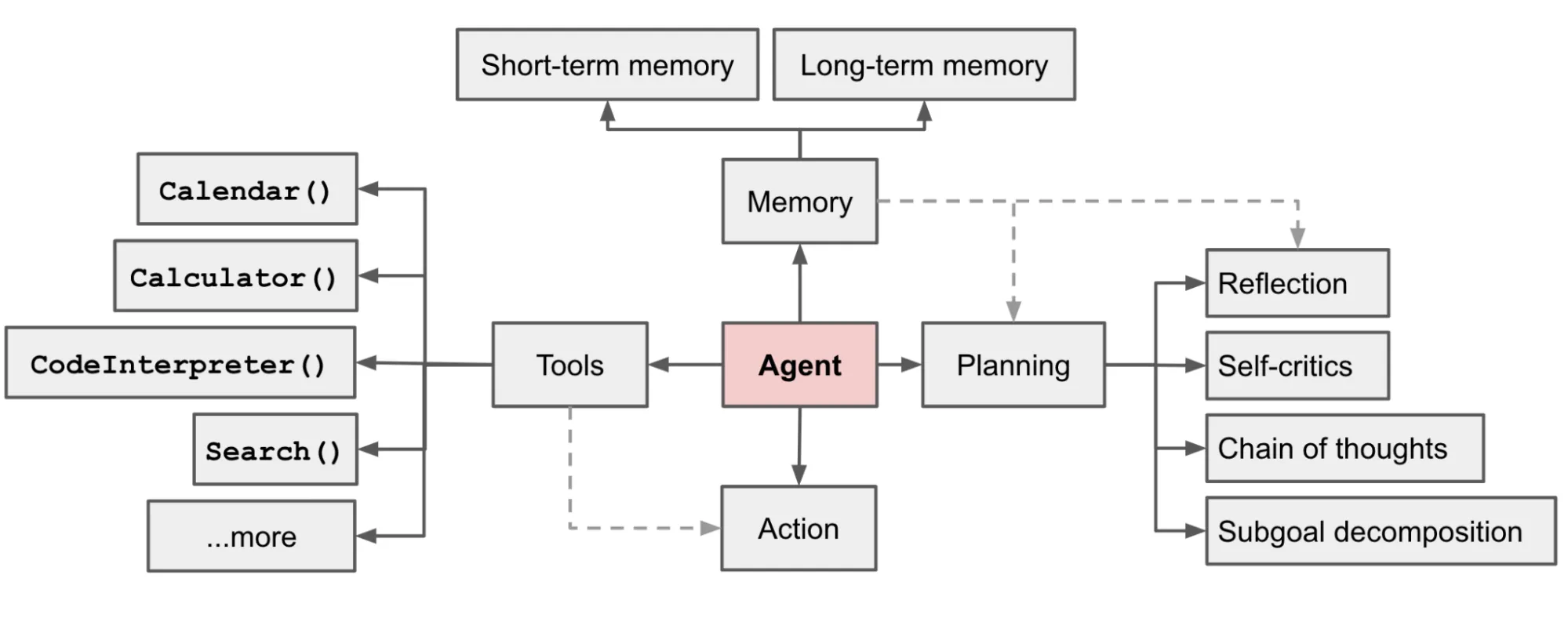

In the context of our discussion, there are three steps:

- Expose a set of Tools to the Agent to aid reasoning in its Planning step.

- Invoke the Tool whenever Agent directs us.

- Inform the results of Tool invocation to the Agent to enable storing it in Short-term memory (for the turn).

Let’s dive deeper in the above order.

(0) Context for the AI

    // Snippets of user queries to examine the context required
    USER: Are there any traffic congestions on my way to Work?
    ...
    USER: Add a new contact for Ramize Peth with details from my last phone call.

Our complexity starts with mention of references like Work. To reason over this we need to provide the AI model “facts” about the User stored in Short-term memory. For example:

- Date and time of User, location specific details

- Home, Work and other details

Depending on the conversation context, the set of tools available to the AI model may change. This set is also part of the Short-term memory:

- `def get_routes(start: str, dest: str) -> RouteInfo` to fetch available routes along with alternatives.

- `def get_call_details(start: str, end: str) -> list[CallInfo]` to fetch details of phone calls along with timestamps.

- `def add_contact(first_name: str, last_name: Optional[str], phone: Optional[str]) -> ContactResult` to add a contact to address book.

- `def send_whatsapp_invite(phone: str) -> bool` to send WhatsApp invite.

Using the Long-term memory, the AI model can refer to past conversations for retrieving facts, or for similar reasoning traces - actions and observations.
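As a rough illustration, the Short-term memory for a turn could be modelled as a small data structure that is serialized into the prompt. This is only a sketch: the `ShortTermMemory` class, its field names, the `current_location` key and the timestamp are assumptions made for the example, not part of any specific framework.

```python
import json
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class ShortTermMemory:
    """Per-turn context: facts about the User plus the tools currently exposed."""

    now: datetime
    facts: dict[str, str] = field(default_factory=dict)
    tools: list[str] = field(default_factory=list)

    def to_context(self) -> str:
        """Serialize the memory into a JSON string that can be placed in a prompt."""
        payload = {"now": self.now.isoformat(), "facts": self.facts, "tools": self.tools}
        return json.dumps(payload, indent=2)


# Illustrative values only; the addresses are the ones used later in this note.
memory = ShortTermMemory(
    now=datetime(2024, 2, 29, 8, 30),
    facts={
        "current_location": "Ayur Street, Rampally",
        "Work": "Femur Street, Rampally",
    },
    tools=[
        "def get_routes(start: str, dest: str) -> RouteInfo",
        "def get_call_details(start: str, end: str) -> list[CallInfo]",
    ],
)
print(memory.to_context())
```

Because the tool list is just another field, swapping the available tools as the conversation context changes amounts to rebuilding this structure for the next turn.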

(1) Reason with function specs

Function specification defines “when to invoke” and “what parameters to invoke with”.

The AI model is fine-tuned [4] to invoke tools only when the control flow requires an operation matching the function description. A second responsibility is to extract the parameters from `CONTEXT` (the conversation, a document, etc.) and supply them to the function call.

During pre-training, LLMs learn from a large corpus of reasoning, code and so on. For solving mathematical problems, one trick is to ask the LLM to generate a code fragment and then run it in a code interpreter to ensure accuracy. Likewise, we can use pseudocode to represent the various elements like context, short-term memory etc. This is the essence of prompting.

We expect the AI model to perform the following reasoning in line with User queries.

    // Prompt goes like this...
    Given the below `CONTEXT` with set of functions you can invoke, choose the
    function calls that can help with USER query.

    # Examples
    // Few shot examples of conversation and context.

    # Context
    CONTEXT = { ... } // context is a pseudocode data structure

    # Conversation
    USER: Are there any traffic congestions on my way to Work?

    // Reasoning segment is not shown to the User but is a necessary part of the
    // Conversation flow.
    INTERNAL REASONING: User wants to retrieve the details of routes and traffic
    events. Looking at the available functions, `get_routes` is most appropriate. I
    will use `start` location as "Ayur Street, Rampally" from `CONTEXT` and `dest`
    is "Femur Street, Rampally" from Work location in `CONTEXT`.
    INTERNAL FUNCTION CALL: get_routes(start="Ayur Street, Rampally",
        dest="Femur Street, Rampally")

The prompt sections that precede `# Conversation` are the in-context learning elements. They help the AI model use a specific generation pattern for this reasoning problem. In the examples, we can illustrate the reasoning logic with Chain of Thought, Tree of Thoughts, ReAct etc.

The `INTERNAL REASONING` section is the trace from the reasoning heuristic we instruct the LLM to use. We’re using a simple Chain of Thought in this example. The core idea is to force the LLM to look for specific details in various parts of the `CONTEXT` to generate a function call.

`INTERNAL FUNCTION CALL` is the output of reasoning.
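To make the prompt layout concrete, here is a minimal sketch of how a client might assemble it. The section names come from the prompt above; the `build_reasoning_prompt` helper, its arguments and the sample few-shot text are assumptions for illustration.

```python
import json

# Template mirroring the prompt layout discussed above.
PROMPT_TEMPLATE = """\
Given the below `CONTEXT` with set of functions you can invoke, choose the
function calls that can help with USER query.

# Examples
{examples}

# Context
CONTEXT = {context}

# Conversation
USER: {query}
INTERNAL REASONING:"""


def build_reasoning_prompt(context: dict, examples: list[str], query: str) -> str:
    """Fill the template with few-shot examples, the serialized context and the query."""
    return PROMPT_TEMPLATE.format(
        examples="\n\n".join(examples),
        context=json.dumps(context, indent=2),
        query=query,
    )


prompt = build_reasoning_prompt(
    context={"facts": {"Work": "Femur Street, Rampally"}, "functions": []},
    examples=["USER: ...\nINTERNAL REASONING: ...\nINTERNAL FUNCTION CALL: ..."],
    query="Are there any traffic congestions on my way to Work?",
)
print(prompt)
```

Ending the prompt with `INTERNAL REASONING:` nudges the model to produce its reasoning trace first and the function call second.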

How do we provide function details in the CONTEXT?

    // Example inspired from the glaive-function-calling-dataset-v2. See
    // https://huggingface.co/datasets/glaiveai/glaive-function-calling-v2.
    //
    // Format popularized by the OpenAI function calling. See
    // https://cookbook.openai.com/examples/function_calling_with_an_openapi_spec
    CONTEXT = {
      "functions": [{
        "name": "get_routes",
        "description": "Get route and traffic events between two locations",
        "parameters": {
          "type": "object",
          "properties": {
            "start": {
              "type": "string",
              "description": "Complete address of the starting location."
            },
            "dest": {
              "type": "string",
              "description": "Complete address of the destination location."
            }
          },
          "required": ["dest"]
        }
      }]
    }

Did you notice the striking similarity between function spec above and the OpenAPI specification? This is exactly where we stand on top of the work done by our predecessors. We kept the “function specification” separate from the “function invocation” and have taught the AI models to reason with specifications alone.
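One way to keep the specification and the invocation in sync is to derive the spec from the function itself. The sketch below is an assumption, not an API of any particular SDK: `spec_from_function` and `param_docs` are hypothetical names, the type mapping is deliberately tiny, and the `get_routes` stub gives `start` a made-up default so that only `dest` is required, as in the spec above.

```python
import inspect

# Minimal mapping from Python annotations to JSON Schema type names.
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def spec_from_function(fn, param_docs: dict) -> dict:
    """Build a function spec (name, description, parameters) from a signature."""
    properties, required = {}, []
    for name, param in inspect.signature(fn).parameters.items():
        properties[name] = {
            "type": _JSON_TYPES.get(param.annotation, "string"),
            "description": param_docs.get(name, ""),
        }
        # Parameters without a default value are treated as required.
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "parameters": {"type": "object", "properties": properties, "required": required},
    }


def get_routes(*, start: str = "current location", dest: str) -> dict:
    """Get route and traffic events between two locations"""
    return {}  # Hypothetical stub; a real implementation would call a routing service.


spec = spec_from_function(
    get_routes,
    param_docs={
        "start": "Complete address of the starting location.",
        "dest": "Complete address of the destination location.",
    },
)
print(spec)
```

The model only ever sees `spec`; the callable stays on the client side.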

Challenges

- Does this method scale? How many functions can we specify in a prompt? OpenAI supports up to 128 functions as of the time of writing (early 2024).

- Will there be collision between the functions specified? E.g., imagine we have `get_routes` and `get_offline_routes`. Will the AI model be able to decide which to invoke & when?

- Along similar lines, can the AI model invoke functions in parallel, or in a specific sequential order?

In the reasoning step, the AI model also extracts various parameters for the function. Again, `CONTEXT` is the primary source along with the pre-training knowledge. We’ll set aside the challenges for now and dive deeper into them in a subsequent note.

Now we know which function can help us find the appropriate response to the User query. Next, let’s invoke it.

(2) Invoke with function execution

Function execution defines “how to invoke” the function.

We have two primary approaches:

- Client driven or Local invocation: AI model provides function signature to invoke, and the client app reads the function manifest and invokes it.

- AI model driven or Remote invocation: AI model is provided the entire function manifest, it invokes the functions remotely using appropriate auth etc.

Client driven approach is more capable since we can use it for invoking local binaries like `ls` etc. on the operating system. It is more complex too.

How does the function invocation work?

In the reasoning step, the function signature is hypothetical. Our first action is to convert the signature to a callable structure. This may require creating a sandbox environment, validating that the function signature is correct, and then invoking the function.

    INTERNAL FUNCTION CALL: get_routes(start="Ayur Street, Rampally",
        dest="Femur Street, Rampally")

Here’s the heuristic in client app after the above output from reasoning step:

- Client app receives the above signature.

- Validate: we check that the syntactic structure of the parameters is valid. We may also want to validate the addresses provided for correctness.

- If the signature is inaccurate, we can attempt to auto-correct it and ask the User to confirm what we’re going to look up.

- Transform: if the function call is implemented via a service provider, we translate the hypothetical signature to the actual REQUEST body.

- Invoke: using the appropriate authentication, invoke the remote API.
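The steps above can be sketched as a small client-side pipeline. This is a simplified illustration under stated assumptions: `SPECS`, `REGISTRY`, `parse_call`, `validate` and `invoke` are hypothetical names, the `get_routes` stub (including its return values and default) stands in for a remote API, and the auto-correct and authentication steps are left out.

```python
import ast

# Hypothetical, trimmed-down specs: allowed and required parameter names.
SPECS = {"get_routes": {"properties": {"start", "dest"}, "required": {"dest"}}}


def parse_call(text: str) -> tuple[str, dict]:
    """Parse 'name(key="value", ...)' into (name, kwargs) without executing it."""
    node = ast.parse(text.strip(), mode="eval").body
    if not isinstance(node, ast.Call) or not isinstance(node.func, ast.Name):
        raise ValueError("not a simple function call")
    if node.args:
        raise ValueError("only keyword arguments are supported")
    # literal_eval accepts literals only, so arbitrary expressions are rejected.
    kwargs = {kw.arg: ast.literal_eval(kw.value) for kw in node.keywords}
    return node.func.id, kwargs


def validate(name: str, kwargs: dict) -> None:
    """Check the hypothetical signature against the spec before invoking."""
    spec = SPECS.get(name)
    if spec is None:
        raise ValueError(f"unknown function: {name}")
    unknown = set(kwargs) - spec["properties"]
    missing = spec["required"] - set(kwargs)
    if unknown or missing:
        raise ValueError(f"unknown={sorted(unknown)} missing={sorted(missing)}")
    if not all(isinstance(value, str) for value in kwargs.values()):
        raise ValueError("all parameters must be strings")


def get_routes(*, start: str = "current location", dest: str) -> dict:
    # Stub standing in for the remote route API (Transform and Invoke steps).
    return {"start": start, "dest": dest, "delay_mins": 20}


REGISTRY = {"get_routes": get_routes}


def invoke(call_text: str) -> dict:
    name, kwargs = parse_call(call_text)
    validate(name, kwargs)
    return REGISTRY[name](**kwargs)


result = invoke('get_routes(start="Ayur Street, Rampally", dest="Femur Street, Rampally")')
print(result)
```

Parsing with `ast` instead of `eval` means the model's output is treated as data: nothing runs until the call has passed validation and been looked up in the registry.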

Query functions which retrieve data are easier to specify and execute.

What if the function call has side effects?

Command functions with side effects, like changing the system state, are harder: once committed, they are difficult to undo. The usual paradigm is to present the write operation and seek confirmation before persisting the change.

We need to tag functions which have side effects, and allow the function specification to provide a `what_if` argument. For example, `add_contact("John Doe", what_if=True)` will just present the structure of the operation for confirmation, and `add_contact("John Doe", what_if=False)` will perform the operation after User confirmation.
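A minimal sketch of the `what_if` pattern follows. The in-memory `CONTACTS` list and the shape of the returned dictionary are assumptions for illustration; the result strings follow the ones used in the synthesis prompt later in this note. Defaulting `what_if` to `True` is a design choice made here so that a write never happens by accident.

```python
from typing import Optional

# Stand-in for the address book; a real app would persist this elsewhere.
CONTACTS: list[dict] = []


def add_contact(
    first_name: str,
    last_name: Optional[str] = None,
    phone: Optional[str] = None,
    what_if: bool = True,
) -> dict:
    """Preview the write when what_if is True; commit it only when what_if is False."""
    contact = {"first_name": first_name, "last_name": last_name, "phone": phone}
    if what_if:
        # Dry run: describe the operation without changing any state.
        return {"result": "require confirmation", "data": contact}
    CONTACTS.append(contact)
    return {"result": "success", "data": contact}


# First pass: present the operation to the User.
preview = add_contact("Ramize", "Peth", "9876512340", what_if=True)

# Second pass: runs only after the User has confirmed the preview.
if preview["result"] == "require confirmation":
    committed = add_contact("Ramize", "Peth", "9876512340", what_if=False)
```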

We need a tight loop for such interactive conversations. Let’s dive deeper into these in the next step.

(3) Synthesize response from results

Result synthesis considers the function call output, and creates a response to answer the User query.

Let’s start with a complex example:

    // Example command or write operation
    USER: Add a new contact for Ramize Peth with details from my last phone call.
    Send an invite to join on WhatsApp.
    ASSISTANT: Sure, I will perform below operations. Does this look right?
    * Add contact: "Ramize Peth", phone: 9876512340
    * I will invite phone number 9876512340 to connect on WhatsApp
    USER: Looks great
    ASSISTANT: Done! Here's the new contact profile:
    https://go.codito.in/contacts/ramize. You can see the WhatsApp invitation in
    the app. Is there anything else you need help with?

The first Assistant response retrieves the phone number from call history (a READ operation), and asks the User to confirm the add contact and send WhatsApp invite operations (WRITE).

Here’s one possible approach to achieve this in a Synthesis LLM prompt:

    // Prompt goes like this...
    Given the below `CONTEXT` and `CONVERSATION`, you have already invoked various
    functions to retrieve data. Below are the output structures for each of the
    functions:

    - `def get_call_details(start: str, end: str) -> list[CallInfo]` to fetch
      details of phone calls along with timestamps.
    - `def add_contact(first_name: str, last_name: Optional[str], phone: Optional[str], what_if: bool) -> ContactResult`
      to add a contact to address book.
    - `def send_whatsapp_invite(phone: str, what_if: bool) -> bool` to send
      WhatsApp invite.

    // Include various rules to synthesize the response, tone etc.
    If `what_if` is `True`, you must seek confirmation for the contact add
    operation. Otherwise, summarize result of the operation.

    # Examples
    // Few shot examples of conversation and context along with responses.

    # Context
    CONTEXT = { ... } // context is a pseudocode data structure

    # Conversation
    // Other earlier turns in the conversation go here...
    USER: Add a new contact for Ramize Peth with details from my last phone call.
    Send an invite to join on WhatsApp.
    INTERNAL FUNCTION CALL: get_call_details(start="2/24/2024", end="2/29/2024")
    INTERNAL FUNCTION OUTPUT: { result: "success", data: [{ phone: "9876512340",
    timestamp: xxx }, ... ] }
    INTERNAL FUNCTION CALL: add_contact(first_name="Ramize", last_name="Peth",
    phone="9876512340", what_if=True)
    INTERNAL FUNCTION OUTPUT: { result: "require confirmation", data: { first_name:
    "Ramize", last_name: "Peth", phone: "9876512340" } }
    INTERNAL FUNCTION CALL: send_whatsapp_invite(phone="9876512340", what_if=True)
    INTERNAL FUNCTION OUTPUT: { result: "require confirmation", data: { phone:
    "9876512340" } }
    ASSISTANT:

The opening part of the prompt, up to `# Examples`, holds the core instructions with details of the various functions involved in this conversation turn. We also expand on various rules for response creation: when to ask for confirmation etc.

The `# Conversation` section contains the previous turns. For brevity and accurate context, we should only include the User and Assistant messages from those turns. `INTERNAL FUNCTION*` messages are relevant only for the current turn.

The `INTERNAL FUNCTION CALL` and `INTERNAL FUNCTION OUTPUT` entries are the function invocations for the current turn. We’re expecting the functions to provide output in a pre-defined format (also outlined in the core instruction at the beginning of the prompt).
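The rule about which messages go into the conversation section can be sketched as follows. The helper names (`format_trace`, `build_conversation`) and the tuple-based history format are assumptions for illustration; only the `USER`, `ASSISTANT` and `INTERNAL FUNCTION *` labels come from the prompt above.

```python
import json


def format_trace(calls: list[tuple[str, dict]]) -> str:
    """Render the current turn's function calls and outputs as prompt lines."""
    lines = []
    for call_text, output in calls:
        lines.append(f"INTERNAL FUNCTION CALL: {call_text}")
        lines.append(f"INTERNAL FUNCTION OUTPUT: {json.dumps(output)}")
    return "\n".join(lines)


def build_conversation(history: list[tuple[str, str]], query: str,
                       calls: list[tuple[str, dict]]) -> str:
    """Keep only USER/ASSISTANT messages from earlier turns, then add this turn."""
    previous = [f"{role}: {text}" for role, text in history
                if role in ("USER", "ASSISTANT")]
    current = [f"USER: {query}", format_trace(calls), "ASSISTANT:"]
    return "\n".join(previous + current)


history = [
    ("USER", "Are there any traffic congestions on my way to Work?"),
    ("INTERNAL FUNCTION CALL", 'get_routes(dest="Femur Street, Rampally")'),
    ("ASSISTANT", "I see a road construction in-progress near the RKM Highway."),
]
calls = [
    ('send_whatsapp_invite(phone="9876512340", what_if=True)',
     {"result": "require confirmation", "data": {"phone": "9876512340"}}),
]
conversation = build_conversation(history, "Send an invite to join on WhatsApp.", calls)
print(conversation)
```

Dropping earlier function traces keeps the prompt short, at the cost of the model not seeing how previous answers were derived.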

Challenges

- We’re assuming a limited set of function calls for a single turn. Does the response change with parallel vs sequential invocations?

- How do we handle errors in a function result?

- Will we have a scenario where the Assistant needs to provide Visual output instead of a Text based response?

We’ll not solve these challenges in the current post; instead, we’ll do a deep dive in the next few notes in this series.

Functions, Assistants and GPTs

We’ve expounded on several new mental models for an AI driven tool paradigm.

- Functions abstract the tools with a clear “specification” and “invocation” primitive.

- Context is part of the Short-term or Working memory of the AI model. It serves as the source of reasoning traces (or inner thoughts) for the current turn.

- During reasoning, we expect the AI model to identify which function to invoke and with what parameters.

- With invocation, we run the function, either locally in the Client app or remotely in the AI agent.

- In response synthesis, we look over the function call traces for a single turn and create an interactive or informational answer to the User’s query.

We started from first principles to arrive here. How do all of these relate to the zillion concepts in current commercial products?

Here’s how I’m reasoning through this from a scenario lens:

Use the function calling API for client side tool invocation. You own the tool definitions, reasoning and synthesis prompts, conversation history etc. This is the most fundamental API available for you to compose. Here’s the starting point: https://platform.openai.com/docs/api-reference/chat/create#chat/create-functions.

Use assistants API if you wish the AI model to manage conversations for you. Start here: https://platform.openai.com/docs/assistants/how-it-works.

- You provide the SYSTEM prompt instructions, Tools, Knowledge (long-term memory).

- Create a Thread with User message, ask the Assistant API to run and provide a response. You do not own the reasoning, or the synthesis prompts.

- AI owned tools like Code Interpreter, or Knowledge retrieval trigger automatically.

- Custom function calls require a handshake. API invokes a callback, you invoke the function, and give the output to AI.

Use GPTs to create assistants without writing code. Start from this: https://platform.openai.com/docs/actions/introduction.

- You provide SYSTEM prompt instructions, behavior for the GPT.

- You provide a set of APIs the GPT can invoke. Set up the authentication information and API specifications.

Also, check out https://help.openai.com/en/articles/8673914-gpts-vs-assistants for the official differences between GPTs and Assistants 😄

Conclusion

Agents bring in intelligence. Tools contribute actions.

Agents decide the sequence of tools. Tools gather knowledge, or operate on the environment (write actions).

Agents learn from the environment, and their context (short and long term memory). Tools are imperative, they do as told.

Agent frameworks will be a commodity. It’s the same “reason, act and learn” loop over and over. The differentiator will be context for short-term decisions, knowledge for long-term decisions, and the tools expanding the reach of an Agent into unknown territories.

Build tools that are unique, or behaviors that can guide an Agent in your domain.

Thank you for reading through this long note. I will follow up with deep dives into the challenges, and who knows we may fine-tune a toy model someday 🚀

Footnotes

1. Erstwhile SOAP, WCF, and now REST with OpenAPI allow the developer to express the API definition in a declarative form. Developer tools parse these specs and create client libraries to interoperate across systems. Moreover, apps like IFTTT allow creating chains of automation connecting various services and their artifacts. Workflows took the place of individual tools or APIs.

2. As per the model card shared by Meta, it may cost ~5M USD to train the 7B, 13B and 70B variants of the Llama 2 AI model. Even with all the cost and energy spent, the knowledge is static and gets outdated. We cannot depend on AI models for retrieving facts.

3. Skills can use one or more APIs or tools. Tools come in two varieties:

   - Neural tools use machine learning and AI to process information. E.g., pattern recognition, speech etc. They’re usually blackbox and lack interpretability.

   - Symbolic tools are rules and logic based. They’re transparent, interpretable, and we can reverse engineer their output to the underlying rules and heuristics.

4. An intuition on when to use fine-tuning vs in-context learning (prompting) is this: fine-tuning mutates the model capabilities: it can add behavior and can make the model forget old behavior. Prompting tries to leverage existing model behavior and guide it to follow a pattern. Teaching a model how to call functions requires fine-tuning a base model. Off-the-shelf LLMs may already be fine-tuned for this behavior. We’ll cover more on fine-tuning in a subsequent post.