Earlier this month I was invited to give a talk on the internals of apps built on Large Language Models (LLMs). The objective was to share enough detail for a non-technical audience to build a mental model of how things work™ inside an AI app.

With the same goal, I’ve tried to reproduce the content below. Do share any feedback you may have.

The transition

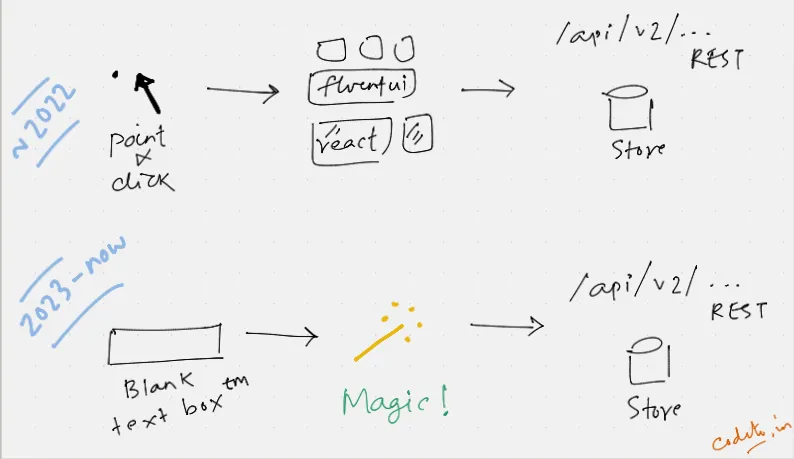

Our app development model has been the same since the 1990s. The point-and-click experience has allowed us to express our intent with clicks. Every click triggers a business flow, connects to a server and returns the outcome to us. In this model, the UI elements effectively define the affordances, i.e., the things that are possible.

Circa 2023, the new paradigm mostly begins with a Blank Text Box™. We express our intent in natural language. Sometimes we don’t even know what to ask! Like an oracle with a magic wand, the app can answer most of our queries.

If we look closely, the business logic didn’t change overnight. We still use the same REST APIs or service-oriented architectures underneath.

Let’s figure out what the magic wand entails.

Patterns

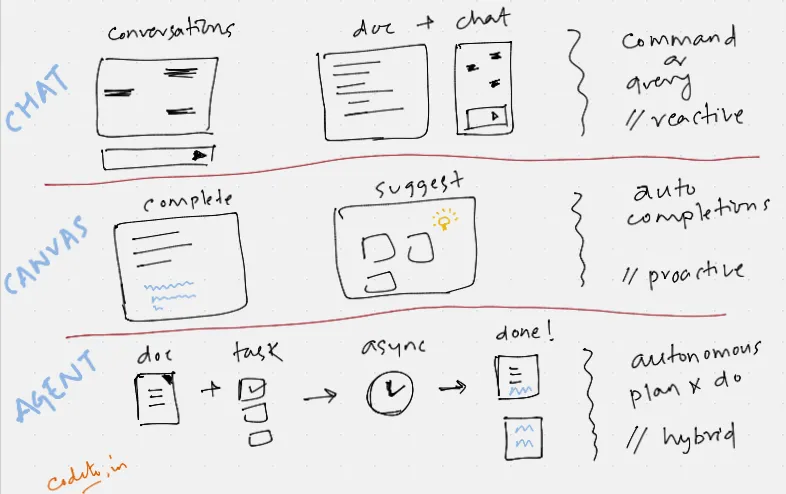

We will talk about three patterns of AI-driven apps in the wild.

Conversational apps expect the user to provide a command or a query in the chat input. Sometimes the conversational interface is available alongside the existing app surface. In this mode, the user can use natural language to perform operations on the app surface (or the canvas).

Canvas apps sprinkle AI ambiently throughout the surface. This ranges from autocompletions in code editors to proactive suggestions on dense charts or reporting surfaces. The core value proposition is to meet users wherever they are and help them excel at whatever they’re trying to do.

Agent apps can take user input for guidance and can also run autonomously. For example, agents can take on the roles of developer, QA and others to collaboratively build software projects. Or they can be simple single-agent flows where we ask the agent to perform tedious and boring tasks like reading large docs, finding key aspects and preparing summaries.

These patterns range from reactive (waiting for user input) to proactive (sharing insights computed ahead of time). They can run ad hoc (on demand) or run continuously in the background, autonomously carrying out tasks.

What are the big rocks in building these?

Challenges

- A blank text box is a world of infinite possibilities. The user can ask about the weather or about work-related data. The app must choose the right tool to fulfill the user’s intent.

- A point-and-click world implied deterministic, predetermined control flows. We know exactly what a click will do. A text box is non-deterministic: we can’t say for sure which tools will get selected, or in what order. The stakes are high, and we can end up with a Frankenstein’s monster. The app must decide on a correct flow of control to achieve the objective.

- With a non-deterministic control flow, how do we know when to terminate? How does the app decide that the information gathered is good enough to answer the user’s query? Also interesting: how does the output of one tool flow into another?

- Apps in the pre-AI world have an exact output. In contrast, an AI app must have a dynamic output, one that changes based on the context and the query. How do we support dynamic and multimodal (image, text, UX controls) outputs?

Building blocks

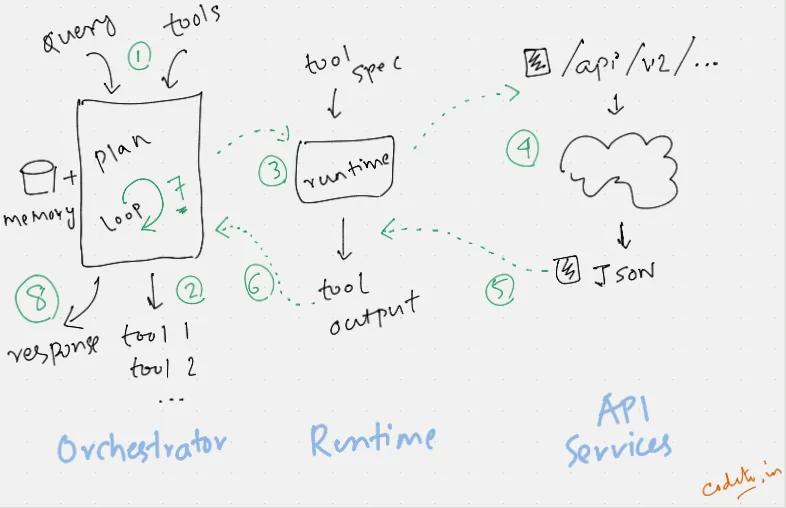

A typical flow in an AI app is triggered with two inputs: a query and a set of tools that can answer it. We expect a response synthesized from one or more of these individual tool outputs.

Orchestrators are the reasoning engine. They start with various kinds of context, such as memory of past conversations and the current environment, along with the user query. Based on these, they select a set of tools to fetch an answer. Upon getting the tools’ output, they must plan again: either invoke another tool or terminate.
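To make tool selection concrete, here is a minimal sketch. It assumes each tool carries a short description; `select_tools()` is a hypothetical keyword-overlap scorer standing in for the LLM, which in a real app would read the descriptions and the query and decide. `get_weather` and `get_stock_price` are placeholder tools made up for the example.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Tool:
    name: str
    description: str  # what the orchestrator "reads" when choosing a tool
    fn: Callable[[str], str]


def get_weather(city: str) -> str:
    return f"(weather for {city})"  # placeholder: a real tool would call a weather API


def get_stock_price(ticker: str) -> str:
    return f"(price for {ticker})"  # placeholder: a real tool would call a quotes API


TOOLS = [
    Tool("get_weather", "weather forecast temperature city", get_weather),
    Tool("get_stock_price", "stock price quote ticker", get_stock_price),
]


def select_tools(query: str, tools: List[Tool]) -> List[Tool]:
    """Hypothetical LLM stand-in: keep tools whose description shares words with the query."""
    query_words = set(query.lower().replace("?", "").split())
    scored = []
    for tool in tools:
        overlap = len(query_words & set(tool.description.split()))
        if overlap > 0:
            scored.append((overlap, tool))
    # Best match first; sort on the score only, so Tool objects are never compared.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [tool for _, tool in scored]
```

For example, `select_tools("What is the weather in Paris?", TOOLS)` keeps only the weather tool. Returning a ranked list rather than a single tool leaves room for the orchestrator to call several tools for one query.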

Runtime engines can execute a tool locally (think of a command-line binary like `ls` or `cat`) or call it as an API (think of the weather API at https://wttr.in). They fetch the response and share it back with the orchestrator.
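A minimal sketch of a runtime engine, assuming a tool call is described by a small dict with a hypothetical `kind` field: local tools run as a subprocess, remote tools are fetched over HTTP with the standard library. The `format=3` query parameter is assumed to ask wttr.in for a one-line summary; check that URL shape against the service before relying on it.

```python
import subprocess
import urllib.parse
import urllib.request


def run_local(argv):
    """Local runtime: execute a command-line tool such as ["ls", "-l"] and return its stdout."""
    completed = subprocess.run(argv, capture_output=True, text=True, check=True)
    return completed.stdout


def weather_url(city):
    """Build a wttr.in request URL; format=3 is assumed to request a one-line summary."""
    return "https://wttr.in/" + urllib.parse.quote(city) + "?format=3"


def run_remote(url, timeout=10.0):
    """Remote runtime: call a tool exposed over HTTP and return the body as text."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8")


def execute(tool):
    """Dispatch a tool call and hand a plain string back to the orchestrator."""
    if tool["kind"] == "local":
        return run_local(tool["argv"])
    if tool["kind"] == "remote":
        return run_remote(tool["url"])
    raise ValueError(f"unknown tool kind: {tool['kind']!r}")
```

For example, `execute({"kind": "local", "argv": ["ls"]})` lists the current directory, while `execute({"kind": "remote", "url": weather_url("London")})` fetches a forecast and needs network access. Returning text from both paths lets the orchestrator treat local and remote tools the same way.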

API services are the gatekeepers of data. They provide unique capabilities, like finding the weather or a stock quote. This layer of abstraction already exists today and powers the various point-and-click UXs.
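To illustrate that the API layer itself does not change, here is a sketch where one hypothetical service function, `get_stock_quote()`, backs both a point-and-click handler and a tool registered for the orchestrator. The tickers, prices and all function names are made up for the example.

```python
# Hypothetical API service: the gatekeeper of the data (an in-memory table here).
_PRICES = {"ACME": 12.5, "GLOBEX": 99.0}


def get_stock_quote(ticker):
    """Existing service call; the AI layer does not change it."""
    ticker = ticker.upper()
    if ticker not in _PRICES:
        raise KeyError(f"unknown ticker: {ticker}")
    return {"ticker": ticker, "price": _PRICES[ticker]}


# Point-and-click world: a button handler calls the service directly.
def on_refresh_clicked(selected_ticker):
    quote = get_stock_quote(selected_ticker)
    return f"{quote['ticker']}: {quote['price']:.2f}"


# AI world: the same service is exposed as a tool, with a description the
# orchestrator can reason over. Only this thin wrapper is new.
STOCK_TOOL = {
    "name": "get_stock_quote",
    "description": "Look up the latest price for a stock ticker symbol.",
    "parameters": {"ticker": "string, e.g. ACME"},
    "fn": get_stock_quote,
}


def call_tool(tool, **kwargs):
    """Runtime side: invoke the wrapped service with the arguments the model chose."""
    return tool["fn"](**kwargs)
```

Both `on_refresh_clicked("acme")` and `call_tool(STOCK_TOOL, ticker="acme")` end up in the same service call; the AI path only adds a description and a generic invoker.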

Here’s the flow in pseudocode:

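```python
# Orchestrator loop for the app; see the figure above for the numbered steps.
# The tools and the rule-based plan() below are toy stand-ins so the sketch
# runs end to end; in a real app an LLM makes the planning decision.

def add(a, b):
    return a + b


def multiply(a, b):
    return a * b


TOOLS = [add, multiply]  # list of tools available
MEMORY = [{"user": "What is (2 + 3) * 4?"}]  # conversation memory
TOOL_RESULTS = []  # list of (tool name, output) pairs gathered so far


def plan(tools, tool_results, memory, query):
    """
    Can we create a response for `query` from outputs in `tool_results`?
    If yes -> return None.
    Otherwise -> return the next tools to invoke, as (tool, args) pairs.
    """
    if not tool_results:
        return [(add, (2, 3))]
    if len(tool_results) == 1:
        # Feed the previous tool's output into the next tool.
        return [(multiply, (tool_results[-1][1], 4))]
    return None  # enough information gathered: terminate


def create_response(query, memory, tool_results):
    return f"{query} -> {tool_results[-1][1]}"


# Main planning loop inside the Orchestrator
query = MEMORY[-1]["user"]
# Steps (1) & (2): plan() takes the tools & query and returns a list of tools
while (tools := plan(TOOLS, TOOL_RESULTS, MEMORY, query)) is not None:
    for tool, args in tools:
        output = tool(*args)  # Step (3): invoke the tool in the Runtime
        # Step (4): the API is invoked; Step (5): the tool returns a response
        TOOL_RESULTS.append((tool.__name__, output))  # Steps (6) & (7): record the output for the next plan()

# No more tools to invoke
# Step (8): create the final response
final_output = create_response(query, MEMORY, TOOL_RESULTS)
print(final_output)  # What is (2 + 3) * 4? -> 20
```

What’s inside the plan() business logic?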

Program synthesis

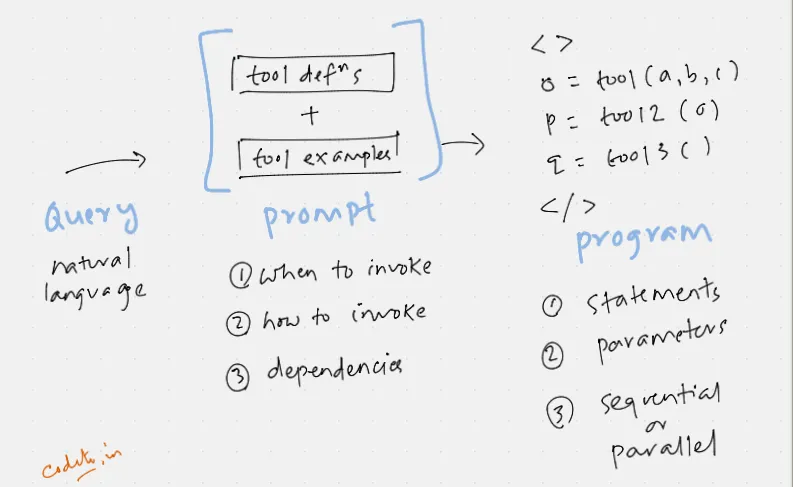

Program synthesis is one of the mechanisms for converting a natural language query into a set of function calls. It leverages the code pre-training of an LLM.

Tool definitions are created in a domain-specific language (DSL). This paper deliberates on the exact mechanism. For example, say our domain is working on a PowerPoint document. Functions we could define include `select_slides()`, `insert_slides()`, `format_text()` and so on. The examples are roughly based on the linked paper.
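One way to hand a DSL to the model is to list each function’s signature and description in the prompt. A minimal sketch, assuming hypothetical stub versions of the three functions (their parameters are made up for illustration, not taken from the paper) and using Python’s standard `inspect` module to render them:

```python
import inspect


# Hypothetical DSL stubs; parameters are assumptions made for this example.
# Each body is just a docstring, which is all the prompt needs.
def select_slides():
    """Return the index of the currently selected slide."""


def insert_slides(after, topic):
    """Insert a new slide about `topic` after the slide at index `after`."""


def format_text(slide, style):
    """Apply a text style such as "title" or "bullet" to the given slide."""


DSL = [select_slides, insert_slides, format_text]


def describe_tools(functions):
    """Render one line per DSL function: its signature followed by its docstring."""
    return "\n".join(
        f"{fn.__name__}{inspect.signature(fn)}: {inspect.getdoc(fn)}" for fn in functions
    )


PROMPT_TEMPLATE = """You can call only these functions:
{tools}

Write a program for this request: {query}"""

prompt = PROMPT_TEMPLATE.format(tools=describe_tools(DSL), query="Add a slide on Christmas")
```

Printing `prompt` shows the three signatures followed by the request; the model’s reply is then expected to be a short program over these functions.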

We have three tasks for the LLM:

- Convert the user query into an intent. E.g., “Add a slide on Christmas” -> `current_slide = select_slides(); new_slide = insert_slides(...)`.

- Get additional context from the user query. E.g., “Christmas” should be a parameter to `insert_slides` to allow fetching the context prior to the insert.

- Connect multiple operations together in sequence or in parallel. This is the actual program we’re talking about. E.g., `insert_slides()` requires the slide location to be selected with `select_slides()`.
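Since the model’s output is a program, the app has to check it before running it. A minimal sketch, assuming the LLM replies with Python-style statements over the DSL: parse the reply with `ast`, accept only calls to whitelisted functions (optionally assigned to a variable), then execute it. The `Deck` class and the function bodies are hypothetical stand-ins for a real presentation API, and this whitelist is illustrative rather than a hardened sandbox.

```python
import ast


class Deck:
    """Hypothetical in-memory stand-in for a presentation."""

    def __init__(self):
        self.slides = ["Title"]
        self.current = 0


deck = Deck()


def select_slides():
    return deck.current


def insert_slides(after, topic):
    deck.slides.insert(after + 1, topic)
    deck.current = after + 1
    return deck.current


ALLOWED = {"select_slides": select_slides, "insert_slides": insert_slides}


def validate(program):
    """Allow only `name = dsl_call(...)` or bare `dsl_call(...)` statements."""
    tree = ast.parse(program)
    for stmt in tree.body:
        if not isinstance(stmt, (ast.Assign, ast.Expr)) or not isinstance(stmt.value, ast.Call):
            raise ValueError(f"line {stmt.lineno}: only DSL calls are allowed")
    for node in ast.walk(tree):
        if isinstance(node, ast.Attribute):
            raise ValueError("attribute access is not allowed")
        if isinstance(node, ast.Call):
            if not (isinstance(node.func, ast.Name) and node.func.id in ALLOWED):
                raise ValueError(f"line {node.lineno}: call outside the DSL")
    return tree


def is_valid(program):
    try:
        validate(program)
        return True
    except (SyntaxError, ValueError):
        return False


def run(program):
    """Execute a validated program with no builtins; return the variables it defined."""
    tree = validate(program)
    namespace = {"__builtins__": {}, **ALLOWED}
    exec(compile(tree, "<llm-program>", "exec"), namespace)
    return {k: v for k, v in namespace.items() if k != "__builtins__" and k not in ALLOWED}
```

With the example from the list, `run("current_slide = select_slides(); new_slide = insert_slides(current_slide, 'Christmas')")` selects the current slide and inserts the new one after it, while a reply such as `__import__('os').system('ls')` is rejected before anything runs.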

An earlier post on Tools and Agents covers various details (including prompts!) of how an Agent (Orchestrator) reasons over the tools to choose the most appropriate one.

Conclusion

In the course of building AI apps, we have learned to embrace the world of non-determinism. We develop the tools and let the reasoning engine decide which combinations of these tools can fulfill an objective.

Thanks for reading this far!